The first Globe to Gates Newsletter said it best. Let's imagine the world as a globe. How can we capture all the processes–all of what is happening on the globe–in software and hardware? This is the essence of geospatial computing: to capture the happenings.

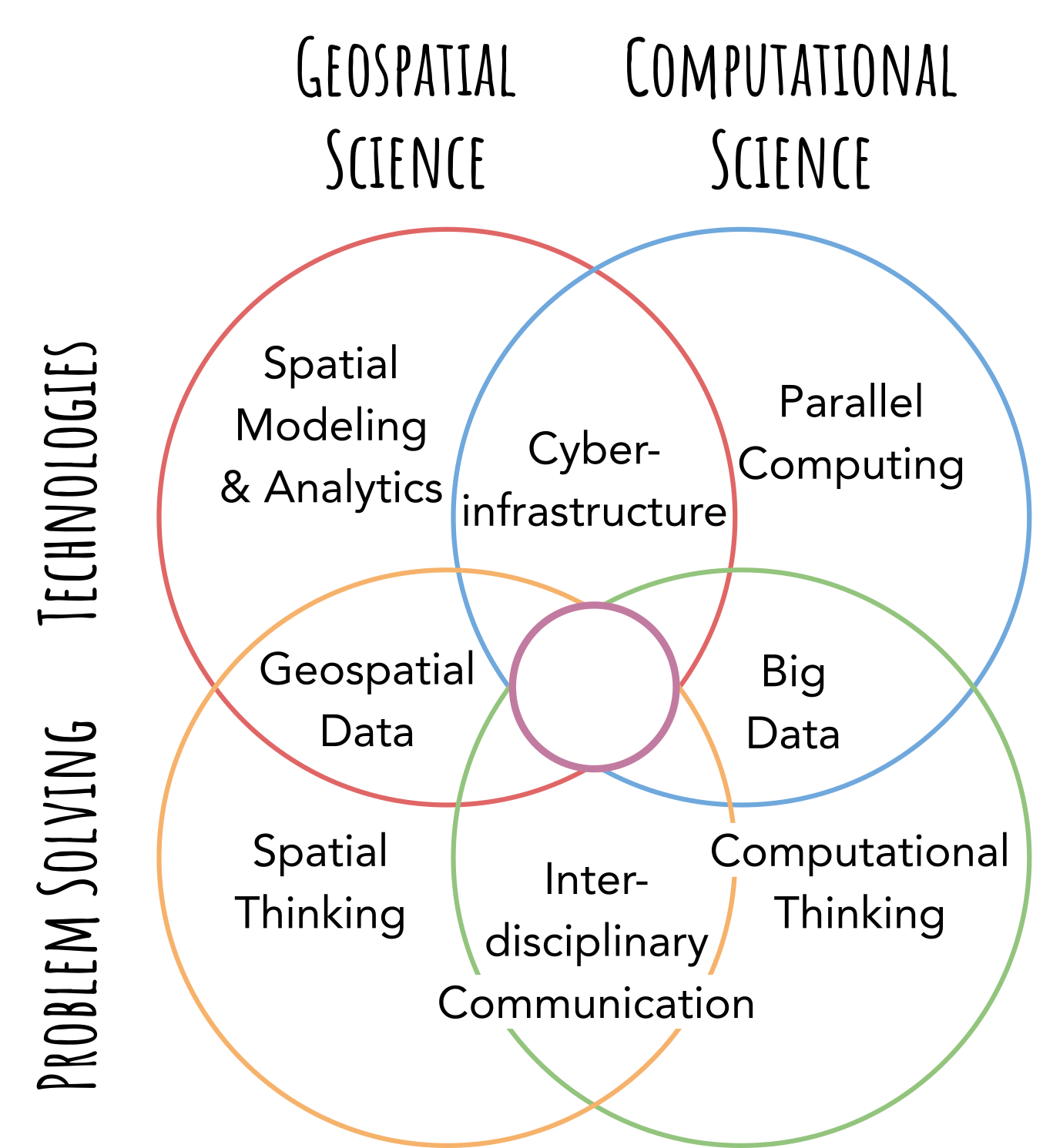

Geospatial computing intersects multiple knowledge and skill dimensions. Technology. Problems. Computational Science. Geospatial Science. You have to think carefully about capturing the world in the 1s and 0s of a computer and then have the skills to do it.

There are many questions to answer.

On the geospatial side, we must ask: How do we model and analyze geospatial phenomena? How do we represent and manipulate geospatial data? How do we think spatially about our problem?

On the computational side, we must ask: How do we use advanced computing technologies? How do we handle data, especially when it grows? How do we think computationally about our problem?

But wait. There is one last question. What do I need to know?

Glad you asked! Here are the eight knowledge areas of geospatial computing.

Cyberinfrastructure

Parallel Computing

Big Data

Computational Thinking

Interdisciplinary Communication

Spatial Thinking

Geospatial Data

Spatial Modeling and Analytics

Mastering one area is standard. But professionals in geospatial computing should have a basic understanding of all eight areas to capture the world in a computer. We call this basic understanding across all eight areas Cyber Literacy for Geographic Information Science.

This essay is Part 1 of a series. First, let us take a peek at four of the eight knowledge areas. We will focus on the computational side this week. Next week's newsletter will concentrate on the remaining four. Finally, in a third newsletter, we will take a step back and look at the dimensions and intersections. So stay tuned.

Cyberinfrastructure. The NSF Blue Ribbon Advisory Panel on Cyberinfrastructure (CI) used an analogy to describe the goals of CI: if physical infrastructure (e.g., roads, power grids, rail lines) enables the industrial economy, cyberinfrastructure will empower the knowledge economy. In the 84-page report, they make an interesting observation: "Although good infrastructure is often taken for granted and noticed only when it stops functioning, it is among the most complex and expensive thing that society creates" (p. 5, NSF report). Cyberinfrastructure is the most complex and expensive computational glue ever created. In theory, it sandwiches computation, storage, and communication components with software, services, instruments, data, and domain expertise.

Knowledge in this area focuses on leveraging computational and data infrastructures to solve problems. Powerful computational infrastructures such as supercomputers or cloud computing platforms can provide the computational horsepower for complex models and analytics. Spatial data infrastructures can help professionals wrangle data and make them available to targeted audiences. Gateways and online portals can hide the complexities of spatial modeling and analytics, parallel computing, and big data management. Understanding the pitfalls of these technologies and how to unlock their potential for geospatial problem solving is the essence of this knowledge area.

Parallel Computing. The world is a complex place. Spatial modeling and analytics are rapidly advancing to make sense of it. Unfortunately, more complex models and analytics demand more computation. On top of that, computing technology has hit an energy wall. Chips cannot go faster because we cannot efficiently dissipate the heat that comes with this speed. So instead of building up, chip creators are building out. Multi-core processors are here to stay. Instead of one fast core for our computations, we will get many slower cores.

Geospatial computing professionals now need to understand how to compute in parallel. Knowledge in this area allows professionals to decompose geospatial problems, data, and methods. It requires skills in developing inter-processor communication strategies to coordinate computation across multiple cores. Parallel computing will enable us to scale computation to match the complexity of the problem. In short, parallel computing knowledge eliminates computation as the limiting factor in solving geospatial problems.
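To make the idea of decomposition concrete, here is a minimal sketch in Python. It assumes a toy raster held as a list of rows and a deliberately simple task (summing every cell). The function names `make_grid`, `split_rows`, `strip_sum`, and `parallel_sum` are hypothetical, made up for this illustration. The raster is cut into row strips, each strip goes to its own worker process, and the only communication is handing out strips and gathering the partial sums.

```python
from concurrent.futures import ProcessPoolExecutor


def make_grid(rows, cols):
    """Build a toy raster of small integers (illustrative data only)."""
    return [[(r * cols + c) % 7 for c in range(cols)] for r in range(rows)]


def split_rows(grid, parts):
    """Decompose the raster into `parts` contiguous row strips of near-equal size."""
    size, extra = divmod(len(grid), parts)
    strips, start = [], 0
    for i in range(parts):
        stop = start + size + (1 if i < extra else 0)
        strips.append(grid[start:stop])
        start = stop
    return strips


def strip_sum(strip):
    """The per-worker task: sum every cell in one strip."""
    return sum(sum(row) for row in strip)


def parallel_sum(grid, workers=4):
    """Send each strip to a worker process, then combine the partial sums."""
    strips = split_rows(grid, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(strip_sum, strips))
    return sum(partials)


if __name__ == "__main__":
    grid = make_grid(1000, 1000)
    # The parallel answer should match the serial one.
    print(parallel_sum(grid) == strip_sum(grid))
```

A sum is about the easiest case there is, because strips never need to talk to each other. Many spatial methods (a moving-window filter, for example) need values from neighboring strips, and that is where communication strategies start to matter.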

Big Data. The world is a big place. Societies around the globe have filled it with instruments and technologies that stream data 24/7. Precipitation gauges. Seismic monitors. Traffic cams. Satellites. Cell phones. All collect data. Understanding how to store, manage, and move these data is crucial to unlocking mysteries deep within.

The Trillion Pixel GeoAI Challenge hosted by Oak Ridge National Lab (ORNL) puts this knowledge area into perspective through a simple challenge. Satellite constellations can take daily one-meter resolution imagery of the entire Earth's surface. That is over a trillion pixels! The challenge: how do we make sense of a trillion pixels in 24 hours, before the next trillion comes streaming in? The trillion pixel challenge highlights the core goal of this knowledge area: how to transform big data into information and insights (in a timely manner).
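A quick back-of-the-envelope calculation shows why the 24-hour deadline is so demanding. The sketch below assumes rounded surface areas (about 510 million square kilometers for the whole Earth, about 149 million for land) and one pixel per square meter. These are my own rough figures for illustration, not numbers taken from the challenge itself.

```python
# Rounded assumptions for illustration, not figures from the challenge.
EARTH_SURFACE_KM2 = 510e6      # approximate total surface area, land plus ocean
EARTH_LAND_KM2 = 149e6         # approximate land area only
PIXELS_PER_KM2 = 1000 * 1000   # one-meter pixels in one square kilometer
SECONDS_PER_DAY = 24 * 60 * 60


def daily_pixels(area_km2):
    """Pixels needed to cover an area once at one-meter resolution."""
    return area_km2 * PIXELS_PER_KM2


def required_rate(pixels, seconds=SECONDS_PER_DAY):
    """Pixels per second that must be processed to finish within the window."""
    return pixels / seconds


if __name__ == "__main__":
    for label, area in [("whole surface", EARTH_SURFACE_KM2), ("land only", EARTH_LAND_KM2)]:
        pixels = daily_pixels(area)
        rate = required_rate(pixels)
        print(f"{label}: {pixels:.2e} pixels/day, {rate:.2e} pixels/second")
```

Under these assumptions, even the land-only case works out to more than a billion pixels every second, all day, just to keep pace.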

Computational Thinking. Ultimately, geospatial computing pros must express their thoughts and theories in computer software and hardware. There are many facets behind computational thinking. The art of computational abstraction involves striking the right balance of simplicity and complexity in a computer program. It can include honing your ability to select and even develop algorithms. Computational thinking can help you automate tasks to save time and enhance replicability. It also means appreciating computational complexity: computation can explode when you add just a little more data.

Perhaps most importantly, computational thinking helps us understand the current limitations of computers and how to go around them. Limitations invite creativity. Professionals in this space are constantly finding new pathways around computational limitations. Every discovery is a new peak that quickly becomes a plateau. But that plateau is flat ground from which we can see yet another ridge and keep striving for new heights.
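Here is one way to see computation explode. Many spatial analyses start by comparing every point with every other point. The sketch below is only an illustration (the helper names `pair_count` and `all_pair_distances` are hypothetical), but it shows that doubling the number of points roughly quadruples the work.

```python
from itertools import combinations
from math import dist


def pair_count(n):
    """Number of unique point pairs among n points: n * (n - 1) / 2."""
    return n * (n - 1) // 2


def all_pair_distances(points):
    """Brute-force distances between every unique pair of (x, y) points."""
    return [dist(a, b) for a, b in combinations(points, 2)]


if __name__ == "__main__":
    for n in (1_000, 2_000, 4_000):
        print(f"{n} points -> {pair_count(n)} distance calculations")
```

For 1,000 points that is 499,500 distance calculations. For 2,000 points it is 1,999,000. A little more data, a lot more computation.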

What is the difference? The difference between a computer scientist and a geospatial computing pro is how they take their knowledge in these four topics and intersect them with the geospatial side. There are subtle and not so subtle challenges. Spatial dependency can confound spatial analytics. Geospatial data in different coordinate systems may not align. Spatial, not computational, scales may present new limitations.
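The coordinate system problem is easy to demonstrate. The sketch below assumes the standard spherical Web Mercator formula (the projection behind most web maps) and a hypothetical helper named `lonlat_to_web_mercator`. In real work you would normally reach for a projection library rather than hand-rolled math. The example location is an arbitrary point chosen for illustration.

```python
import math

EARTH_RADIUS_M = 6378137.0  # sphere radius used by Web Mercator (WGS84 semi-major axis)


def lonlat_to_web_mercator(lon_deg, lat_deg):
    """Convert longitude/latitude in degrees to Web Mercator x/y in meters."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y


if __name__ == "__main__":
    lon, lat = -93.27, 44.98  # an arbitrary example location, in degrees
    x, y = lonlat_to_web_mercator(lon, lat)
    print(f"degrees: ({lon}, {lat})")
    print(f"meters:  ({x:.0f}, {y:.0f})")
```

Same place, very different numbers. Overlay one dataset stored in degrees on another stored in meters without converting, and the two will not line up.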

Next week, the saga continues. I will share Part 2, covering Spatial Modeling and Analytics, Geospatial Data, Spatial Thinking, and the elusive Interdisciplinary Communication.

Can't wait until next week? If you want to learn even more, then check this out. Twelve outstanding scholars (and me) wrote a journal article on this subject. In the article, we outline the eight core knowledge areas, establish a framework for teaching and learning, and create a competency matrix to evaluate levels of cyber GIScience literacy across the eight knowledge areas. Click the link to the article below. Or send me an email (eshook@gmail.com) if you want more information.

Shook, Eric, et al. "Cyber literacy for GIScience: Toward formalizing geospatial computing education." The Professional Geographer 71.2 (2019): 221-238. [Journal article link]

Before you go, I have two favors to ask.

First, please let me know which topics you find most interesting in the poll below. After this multi-part series, I plan to write introductory explainers and technical deep dives. I need to pick the first area, so let me know your favorite.

Second, if you are interested in this content or know someone interested in the computational side (or the upcoming geospatial side), please click “Share Globe to Gates” below so you can all stay up-to-date with the latest essays.